39 soft labels machine learning

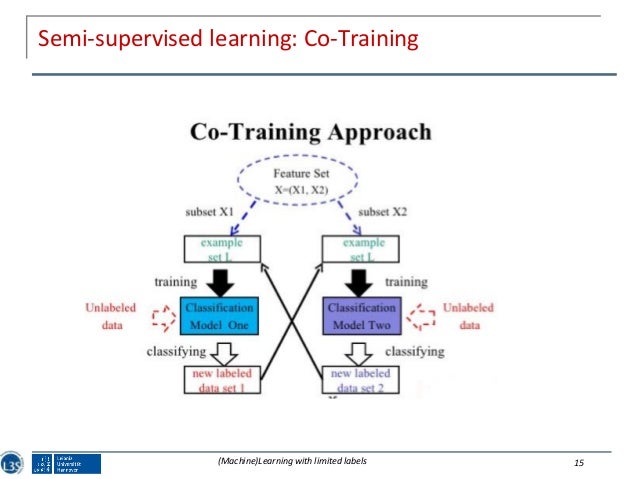

Efficient Learning with Soft Label Information and Multiple Annotators Note that our learning from auxiliary soft labels approach is complementary to active learning: while the latter aims to select the most informative examples, we aim to gain more useful information from those selected. This gives us an opportunity to combine these two approaches.

When does label smoothing help? - NeurIPS The generalization and learning speed of a multi-class neural network can often be significantly improved by using soft targets that are a weighted average of the ... a range of tasks, including image classification, speech recognition, and machine translation (Table 1). Szegedy et al. [6] originally proposed label smoothing as a strategy ...
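
The label-smoothing work describes soft targets built as a weighted average of the hard target and the uniform distribution over labels. A minimal plain-Python sketch of that mixing step follows; the function name `smooth_labels` and the smoothing weight `alpha=0.1` are illustrative choices, not values taken from the paper.

```python
def smooth_labels(hard, alpha=0.1):
    """Mix a one-hot target with the uniform distribution over K classes.

    Each entry becomes (1 - alpha) * hard[k] + alpha / K, so the smoothed
    target still sums to 1 but no class gets exactly 0 or 1.
    """
    k = len(hard)
    return [(1.0 - alpha) * y + alpha / k for y in hard]


hard_target = [0.0, 0.0, 1.0, 0.0]                  # one-hot: true class is index 2
soft_target = smooth_labels(hard_target, alpha=0.1)
print(soft_target)  # approximately [0.025, 0.025, 0.925, 0.025]
```

Setting `alpha=0` returns the hard target unchanged; larger values pull the target closer to uniform.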

A Gentle Introduction to Cross-Entropy for Machine Learning Cross-entropy is commonly used in machine learning as a loss function. Cross-entropy is a measure from the field of information theory, building upon entropy and generally calculating the difference between two probability distributions. It is closely related to, but different from, KL divergence, which calculates the relative entropy between two probability distributions, whereas cross-entropy …
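
For a soft target p and a predicted distribution q, cross-entropy and KL divergence are linked by H(p, q) = H(p) + KL(p || q), so minimizing cross-entropy over q is the same as minimizing KL divergence. A minimal plain-Python check follows; the target and logits are made-up values, and the softmax step mirrors the usual way a classifier produces q.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(p):
    """H(p) = -sum_k p_k log p_k, treating 0 * log 0 as 0."""
    return -sum(pk * math.log(pk) for pk in p if pk > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum_k p_k log q_k; the target p may be soft."""
    return -sum(pk * math.log(qk) for pk, qk in zip(p, q) if pk > 0)


def kl_divergence(p, q):
    """KL(p || q) = sum_k p_k log(p_k / q_k)."""
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q) if pk > 0)


target = [0.7, 0.2, 0.1]             # a soft label (made-up values)
pred = softmax([2.0, 0.5, -1.0])     # model probabilities from made-up logits

ce = cross_entropy(target, pred)
# Cross-entropy = entropy of the target + KL divergence from target to prediction.
print(ce, entropy(target) + kl_divergence(target, pred))
```

Because this soft target has non-zero entropy, the cross-entropy can never fall below `entropy(target)`, even for a perfect prediction.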

Machine learning - Wikipedia Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks.

machine learning - What are soft classes? - Cross Validated You can't do that with hard classes, other than by creating two training instances with two different labels: x -> [1, 0, 0, 0, 0] and x -> [0, 0, 1, 0, 0]. As a result, the weights will probably bounce back and forth, because the two examples push them in different directions. That's when soft classes can be helpful.

Unsupervised Machine Learning: Examples and Use Cases - AltexSoft More often than not, unsupervised learning deals with huge datasets, which may increase the computational complexity. Despite these pitfalls, unsupervised machine learning is a robust tool in the hands of data scientists, data engineers, and machine learning engineers, as it is capable of bringing any business of any industry to a whole new level.
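
The Cross Validated answer on soft classes argues that two conflicting hard examples for the same x push the weights in different directions. For a softmax output trained with cross-entropy, the gradient with respect to the logits is (predicted probabilities minus target), so one soft target such as [0.5, 0, 0.5, 0, 0] yields exactly the average of the two hard-label gradients in a single step. The sketch below checks this numerically; the logits are made-up values.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ce_grad_wrt_logits(target, logits):
    """Gradient of softmax + cross-entropy w.r.t. the logits: q - p."""
    q = softmax(logits)
    return [qk - pk for qk, pk in zip(q, target)]


logits = [0.3, -0.2, 0.1, 0.0, 0.5]     # made-up model scores for one input x
hard_a = [1, 0, 0, 0, 0]                 # first training copy of x
hard_b = [0, 0, 1, 0, 0]                 # second copy of x, conflicting label
soft = [0.5, 0, 0.5, 0, 0]               # one soft label carrying both

g_a = ce_grad_wrt_logits(hard_a, logits)
g_b = ce_grad_wrt_logits(hard_b, logits)
g_soft = ce_grad_wrt_logits(soft, logits)

# The hard copies push logits 0 and 2 in opposite directions...
print(g_a[0] < 0 < g_b[0], g_b[2] < 0 < g_a[2])  # True True
# ...while the soft label gives their average as a single, consistent update.
avg = [(a + b) / 2 for a, b in zip(g_a, g_b)]
print(all(abs(s - v) < 1e-12 for s, v in zip(g_soft, avg)))  # True
```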

Learning Soft Labels via Meta Learning - Apple Machine Learning Research The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image classification, our method leads to consistent gains across different datasets and architectures. For instance, dynamically learned labels improve ResNet18 by 2.1% on CIFAR100.

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows:

- the positive class to have the largest probability,
- while all other classes have a very small probability.

Pseudo Labelling - A Guide To Semi-Supervised Learning There are 3 kinds of machine learning approaches: Supervised, Unsupervised, and Reinforcement Learning. Supervised learning, as we know, is where both data and labels are present. Unsupervised learning is where only data, and no labels, are present. Reinforcement learning is where agents learn from the actions they take in order to earn rewards.

Is it okay to use cross entropy loss function with soft labels? In the case of 'soft' labels like you mention, the labels are no longer class identities themselves, but probabilities over two possible classes. Because of this, you can't use the standard expression for the log loss. But the concept of cross entropy still applies. In fact, it seems even more natural in this case.
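
Following the Cross Validated answer, with a soft binary label p the cross-entropy keeps both terms, -(p log q + (1 - p) log(1 - q)), instead of the single term that the hard-label log loss reduces to. This plain-Python sketch (the clipping constant `eps` is an assumption) also checks, by a simple grid search, that the loss is smallest when the prediction q equals the soft label.

```python
import math


def binary_cross_entropy(p, q, eps=1e-12):
    """Cross-entropy between a soft binary label p and a predicted probability q.

    With a hard label (p in {0, 1}) one term vanishes and this is the usual
    log loss; with a soft label such as p = 0.7 both terms contribute.
    """
    q = min(max(q, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(p * math.log(q) + (1.0 - p) * math.log(1.0 - q))


soft_label = 0.7
candidates = [i / 100 for i in range(1, 100)]
best_q = min(candidates, key=lambda q: binary_cross_entropy(soft_label, q))
print(best_q)  # 0.7: the loss is minimized when the prediction matches the soft label
```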

MNIST For Machine Learning Beginners With Softmax Regression (published Jun 20, 2018) This is a tutorial for beginners interested in learning about MNIST and softmax regression using machine learning (ML) and TensorFlow. When we start learning programming, the first thing we usually learn to do is print "Hello World." Just as Hello World is the entry point to programming, MNIST is the starting ...

Machine Learning Algorithm - an overview | ScienceDirect Topics Machine learning algorithms can be applied to IIoT to reap the rewards of cost savings, improved time, and performance. In the recent era, we have all experienced the benefits of machine learning techniques, from streaming movie services that recommend titles based on viewing habits to monitoring fraudulent activity based on customers' spending patterns.

[D] Instance weighting with soft labels. : MachineLearning - reddit Suppose you are given training instances with soft labels, i.e., your training instances are of the form (x, y, p), where x is the input, y is the class, and p is the probability that x is of class y. Some classifiers allow you to specify an instance weight for each example in the training set.

Multi-Class Neural Networks: Softmax | Machine Learning - Google Developers Recall that logistic regression produces a decimal between 0 and 1.0. For example, a logistic regression output of 0.8 from an email classifier suggests an 80% chance of an email being spam and a 20% chance of it being not spam. Clearly, the sum of the probabilities of an email ...
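
The Reddit question asks how per-instance weights could handle (x, y, p) soft labels. One common approach (an assumption here, not something the thread states) is, in the binary case, to add each example twice: once with label y and weight p, and once with the opposite label and weight 1 - p. For a model trained on weighted log loss, the two weighted terms add up to the soft-label cross-entropy, which this sketch checks. `expand_soft_instance` is a hypothetical helper and the numbers are made up.

```python
import math


def log_loss(label, q):
    """Standard log loss for a hard label (0 or 1) and predicted P(class 1) = q."""
    return -math.log(q) if label == 1 else -math.log(1.0 - q)


def expand_soft_instance(x, y, p):
    """Hypothetical helper: split (x, y, p) into two weighted hard instances.

    Binary case with labels 0/1: the example appears once with label y and
    weight p, and once with the other label and weight 1 - p.
    """
    return [(x, y, p), (x, 1 - y, 1.0 - p)]


x, y, p = [1.2, -0.4], 1, 0.8   # made-up input, class, and probability
q = 0.65                         # some model's predicted P(class 1 | x)

weighted = sum(w * log_loss(label, q) for _, label, w in expand_soft_instance(x, y, p))
# Here y = 1, so p is the soft label for class 1.
soft = -(p * math.log(q) + (1 - p) * math.log(1 - q))
print(abs(weighted - soft) < 1e-12)  # True
```

In scikit-learn, many estimators (for example `LogisticRegression`) accept a `sample_weight` argument in `fit`, which is where such expanded weights would go.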

Support-vector machine - Wikipedia In machine learning, support-vector machines (SVMs, also support-vector networks) are supervised learning models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories by Vladimir Vapnik with colleagues (Boser et al., 1992, Guyon et al., 1993, Cortes and Vapnik, 1995, Vapnik et al., …

Label Smoothing Explained | Papers With Code Label Smoothing is a regularization technique that introduces noise for the labels. This accounts for the fact that datasets may have mistakes in them, so maximizing the log-likelihood $\log{p}\left(y\mid{x}\right)$ directly can be harmful. (Source: Deep Learning, Goodfellow et al.)

What is the definition of "soft label" and "hard label"? A soft label is one that has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.

Scaling techniques in Machine Learning - GeeksforGeeks Dec 04, 2021 · b. Semantic differential scale: The semantic differential is a 7-point rating scale with endpoints associated with bipolar labels. The negative word or phrase sometimes appears on the left side and sometimes on the right side.

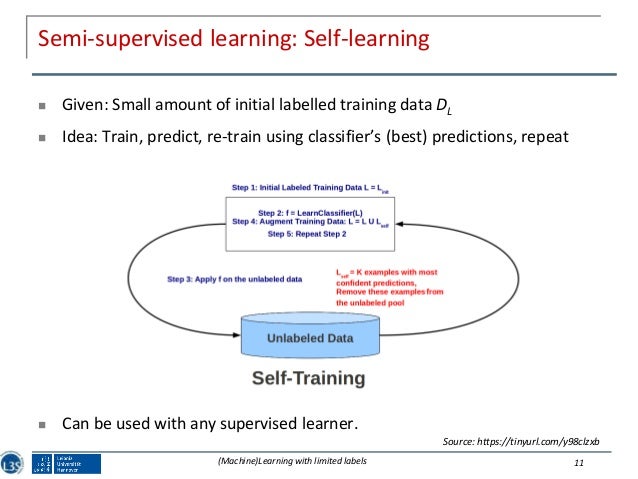

Machine learning - Wikipedia Machine learning (ML) ... Some of the training examples are missing training labels, yet many machine-learning researchers have found that unlabeled data, when used in conjunction with a small amount of labeled data, can produce a considerable improvement in learning accuracy. In weakly supervised learning, the training labels are noisy, limited, or imprecise; however, these …
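
The semi-supervised setting described above, and the pseudo-labelling guide excerpted earlier, can be illustrated with one round of pseudo-labelling: train on the labeled points, predict the unlabeled ones, and keep only confident predictions as extra training labels. This sketch assumes a deliberately simple nearest-centroid classifier on 1-D data and a confidence threshold of 0.9; both are illustrative choices, and the data are made up.

```python
import math


def fit_centroids(points, labels):
    """Mean of the points in each class (a deliberately simple stand-in model)."""
    centroids = {}
    for c in set(labels):
        members = [x for x, y in zip(points, labels) if y == c]
        centroids[c] = sum(members) / len(members)
    return centroids


def predict_proba(centroids, x):
    """Softmax over negative squared distances to each class centroid."""
    scores = {c: -(x - m) ** 2 for c, m in centroids.items()}
    top = max(scores.values())
    exps = {c: math.exp(s - top) for c, s in scores.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}


def pseudo_label(labeled_x, labeled_y, unlabeled_x, threshold=0.9):
    """One round of pseudo-labelling: add only confident predictions, then refit."""
    centroids = fit_centroids(labeled_x, labeled_y)
    new_x, new_y = list(labeled_x), list(labeled_y)
    for x in unlabeled_x:
        label, conf = max(predict_proba(centroids, x).items(), key=lambda kv: kv[1])
        if conf >= threshold:
            new_x.append(x)
            new_y.append(label)
    return fit_centroids(new_x, new_y), new_x, new_y


labeled_x, labeled_y = [0.0, 0.5, 4.0, 4.5], [0, 0, 1, 1]
unlabeled_x = [0.2, 1.0, 2.25, 3.8, 5.0]
centroids, train_x, train_y = pseudo_label(labeled_x, labeled_y, unlabeled_x)
# 2.25 sits exactly halfway between the centroids (0.25 and 4.25), so it is skipped.
print(train_x, train_y)
```

A soft variant would keep the predicted probabilities themselves as training targets instead of the argmax class.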

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin-induced thrombocytopenia.

Scaling techniques in Machine Learning - GeeksforGeeks 04.12.2021 · Note: Generally, the most preferred shampoo is placed at the top, while the least preferred is placed at the bottom. Non-comparative scales: In non-comparative scales, each object of the stimulus set is scaled independently of the others. The resulting data are …

Empirical Comparison of "Hard" and "Soft" Label Propagation for … … (SP), propagates soft labels such as class membership scores or probabilities. To illustrate the difference between these approaches, assume that we want to find fraudu… … a separate classification problem for each CoRA sub-topic in the Machine Learning category. Despite certain differences between our results for CoRA and synthetic data, we ob…

UCI Machine Learning Repository: Data Sets - University of … Machine Learning based ZZAlpha Ltd. Stock Recommendations 2012-2014: The data here are the ZZAlpha® machine learning recommendations made for various US traded stock portfolios the morning of each day during the 3 year period Jan 1, 2012 - Dec 31, 2014. Folio: 20 photos of leaves for each of 32 different species.

Efficient Learning of Classification Models from Soft-label Information ... to advance a relatively new machine learning approach proposed to address the sample annotation problem: learning with soft label information (Nguyen, Valizadegan, and Hauskrecht 2011a; 2011b), in which each instance is associated with a soft label reflecting the certainty or belief of …

GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... Jun 15, 2022 · A Novel Perspective for Positive-Unlabeled Learning via Noisy Labels. Ensemble Learning with Manifold-Based Data Splitting for Noisy Label Correction. MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels. On the Robustness of Monte Carlo Dropout Trained with Noisy Labels.

How to Label Data for Machine Learning in Python - ActiveState To create a labeling project, run the following command: `label-studio init .` Once the project has been created, you will receive the message "Label Studio has been successfully initialized. Check project states in .\". Then start the server with `label-studio start .\`.

Research - Apple Machine Learning Research Explore advancements in state of the art machine learning research in speech and natural language, privacy, computer vision, health, and more.

Unsupervised Machine Learning: Examples and Use Cases Unsupervised machine learning is the process of inferring underlying hidden patterns from historical data. Within such an approach, a machine learning model tries to find any similarities, differences, patterns, and structure in data by itself. No prior human intervention is needed. Let’s get back to our example of a child’s experiential learning. Picture a toddler. The child knows …

The Ultimate Guide to Data Labeling for Machine Learning - CloudFactory In machine learning, if you have labeled data, that means your data is marked up, or annotated, to show the target, which is the answer you want your machine learning model to predict. In general, data labeling can refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.

[2009.09496] Learning Soft Labels via Meta Learning - arXiv.org Nidhi Vyas, Shreyas Saxena, Thomas Voice. One-hot labels do not represent soft decision boundaries among concepts, and hence models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

[1906.02629] When Does Label Smoothing Help? - arXiv.org The generalization and learning speed of a multi-class neural network can often be significantly improved by using soft targets that are a weighted average of the hard targets and the uniform distribution over labels. Smoothing the labels in this way prevents the network from becoming over-confident and label smoothing has been used in many state-of-the-art models, including image ...

Label Smoothing: An ingredient of higher model accuracy These are soft labels instead of hard labels (that is, 0 and 1). As a result, an incorrect prediction produces a somewhat lower loss, so the model is penalized slightly less for it.
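
To check that claim numerically, the sketch below compares cross-entropy against a hard target and against a smoothed target (alpha = 0.1, two classes) for a confident wrong prediction and a confident right one. The probabilities are made-up illustrative values.

```python
import math


def cross_entropy(target, pred):
    """-sum_k target_k * log(pred_k), skipping zero-weight terms."""
    return -sum(t * math.log(p) for t, p in zip(target, pred) if t > 0)


def smooth(target, alpha):
    """Label smoothing: (1 - alpha) * target + alpha / K."""
    k = len(target)
    return [(1 - alpha) * t + alpha / k for t in target]


hard = [1.0, 0.0]                # true class is 0
soft = smooth(hard, alpha=0.1)   # approximately [0.95, 0.05]

wrong = [0.1, 0.9]               # confident, incorrect prediction
right = [0.99, 0.01]             # confident, correct prediction

print(cross_entropy(hard, wrong), cross_entropy(soft, wrong))  # about 2.303 vs 2.193
print(cross_entropy(hard, right), cross_entropy(soft, right))  # about 0.010 vs 0.240
```

The first line matches the excerpt: the wrong prediction is penalized less. The second shows the other side of the trade-off: a confident correct prediction now costs more, which is how smoothing discourages over-confidence, as the "When Does Label Smoothing Help?" abstract notes.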

What are labels in machine learning? - Quora Machine learning depends on a labeled set of data that the algorithm can learn from. This dataset is gathered by giving the unlabeled data to humans and asking them to make certain judgments about them. For example, the question might be: "Does this photo contain a car?" The labeler then looks at each photo and determines whether a car can be seen.

How to Label Data for Machine Learning: Process and Tools - AltexSoft Data labeling (or data annotation) is the process of adding target attributes to training data and labeling them so that a machine learning model can learn what predictions it is expected to make. This process is one of the stages in preparing data for supervised machine learning.

An introduction to MultiLabel classification - GeeksforGeeks To compute these metrics, we use the metrics module from sklearn, which compares the model's predictions on the test data with the true labels. Code:

```python
from sklearn.metrics import accuracy_score, hamming_loss

# mlknn_classifier, X_test_tfidf and y_test come from earlier steps of the tutorial
predicted = mlknn_classifier.predict(X_test_tfidf)
print(accuracy_score(y_test, predicted))
print(hamming_loss(y_test, predicted))
```
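
For multilabel indicator input, scikit-learn's `accuracy_score` computes subset accuracy (every label of a sample must match), while `hamming_loss` is the fraction of individual labels predicted wrongly. A dependency-free sketch of both definitions, with made-up data, shows why the two numbers can differ a lot; the `manual_*` names are hypothetical.

```python
def manual_subset_accuracy(y_true, y_pred):
    """Fraction of samples whose entire label vector is predicted exactly."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if list(t) == list(p))
    return matches / len(y_true)


def manual_hamming_loss(y_true, y_pred):
    """Fraction of individual label assignments that are wrong."""
    wrong = sum(a != b for t, p in zip(y_true, y_pred) for a, b in zip(t, p))
    total = sum(len(t) for t in y_true)
    return wrong / total


y_true = [[1, 0, 1], [0, 1, 0], [1, 1, 0]]
y_pred = [[1, 0, 1], [0, 1, 1], [1, 0, 0]]
print(manual_subset_accuracy(y_true, y_pred))  # 0.333... (only the first row matches exactly)
print(manual_hamming_loss(y_true, y_pred))     # 0.222... (2 wrong labels out of 9)
```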

ARIMA for Classification with Soft Labels | by Marco Cerliani | Towards ... In this post, we introduced a technique to carry out classification tasks with soft labels and regression models. Firstly, we applied it with tabular data, and then we used it to model time-series with ARIMA. Generally, it is applicable in every context and every scenario, providing also probability scores.
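
The post's core idea is to treat soft labels as a regression target and read the bounded prediction as a probability. This sketch swaps ARIMA for ordinary least squares on a single feature, purely to keep it short; the clipping to [0, 1], the 0.5 threshold, and the data are illustrative assumptions rather than details from the post.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x (closed form, one feature)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def predict_soft(a, b, x):
    """Regression output clipped to [0, 1] so it can be read as a probability."""
    return min(1.0, max(0.0, a + b * x))


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
soft_ys = [0.05, 0.2, 0.5, 0.8, 0.95]   # made-up soft labels for class 1
a, b = fit_line(xs, soft_ys)            # roughly a = 0.02, b = 0.24

queries = [-1.0, 0.5, 3.0, 6.0]
probs = [predict_soft(a, b, x) for x in queries]
hard = [int(p >= 0.5) for p in probs]   # threshold soft scores into classes
print(probs, hard)
```

Clipping is the simplest way to keep a linear model's output in range; a sigmoid link (that is, logistic regression) is the more principled alternative.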

Pros and Cons of Supervised Machine Learning - Pythonista … Another typical task of supervised machine learning is to predict a numerical target value from some given data and labels. I hope you’ve understood the advantages of supervised machine learning. Now, let us take a look at the disadvantages. There are plenty of cons. Some of them are given below. Cons of Supervised Machine Learning

UCI Machine Learning Repository: Data Sets A machine learning based technique was used to extract 15 features, all of which are real-valued attributes. Productivity Prediction of Garment Employees: This dataset includes important attributes of the garment manufacturing process and the productivity of the employees, which had been collected manually and also validated by the industry ...

What is the difference between soft and hard labels? Hard label = binary encoded, e.g. [0, 0, 1, 0]. Soft label = probability encoded, e.g. [0.1, 0.3, 0.5, 0.1]. Soft labels have the potential to tell a model more about the meaning of each sample.
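
A small sketch that checks a soft label is a valid probability distribution (non-negative, summing to 1) and collapses it to a one-hot hard label with argmax; the function names and tolerance are illustrative.

```python
def is_valid_soft_label(label, tol=1e-9):
    """A soft label should be non-negative and sum to 1."""
    return all(v >= 0 for v in label) and abs(sum(label) - 1.0) <= tol


def to_hard_label(soft):
    """Collapse a soft label to a one-hot hard label via argmax."""
    k = max(range(len(soft)), key=soft.__getitem__)
    return [1 if i == k else 0 for i in range(len(soft))]


soft = [0.1, 0.3, 0.5, 0.1]
print(is_valid_soft_label(soft))                   # True
print(to_hard_label(soft))                         # [0, 0, 1, 0]
print(is_valid_soft_label([0.1, 0.3, 0.5, 0.2]))  # False: sums to 1.1
```

Collapsing [0.1, 0.3, 0.5, 0.1] to [0, 0, 1, 0] discards the 0.3 assigned to the second class, which is exactly the extra information a soft label can carry.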

python - scikit-learn classification on soft labels - Stack Overflow Generally speaking, the form of the labels ("hard" or "soft") is given by the algorithm chosen for prediction and by the data on hand for target. If your data has "hard" labels, and you desire a "soft" label output by your model (which can be thresholded to give a "hard" label), then yes, logistic regression is in this category.
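
scikit-learn's `LogisticRegression.fit` expects discrete class labels, so this sketch writes the optimizer by hand: plain gradient descent on binary cross-entropy, whose gradient (prediction minus target) is well defined for soft targets. The soft outputs are then thresholded to hard labels, as the answer describes. The learning rate, epoch count, and data are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_soft(xs, ps, lr=0.5, epochs=2000):
    """Fit P(y=1|x) = sigmoid(w*x + b) to soft targets p in [0, 1].

    The gradient of binary cross-entropy w.r.t. the logit is (q - p), so
    full-batch gradient descent works unchanged with soft p.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        gw = gb = 0.0
        for x, p in zip(xs, ps):
            err = sigmoid(w * x + b) - p
            gw += err * x
            gb += err
        w -= lr * gw / n
        b -= lr * gb / n
    return w, b


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ps = [0.1, 0.25, 0.5, 0.75, 0.9]   # made-up soft labels
w, b = fit_logistic_soft(xs, ps)

queries = [-1.5, 1.5]
soft_out = [sigmoid(w * x + b) for x in queries]
hard_out = [int(q >= 0.5) for q in soft_out]   # threshold to get hard labels
print(w, b, soft_out, hard_out)
```

These particular soft labels lie exactly on a logistic curve (logit(0.75) = ln 3 and logit(0.9) = 2 ln 3), so the fit recovers w ≈ ln 3 and b ≈ 0; real soft labels will usually only be approximated.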

Semi-Supervised Learning With Label Propagation - Machine Learning Mastery Nodes in the graph then have soft labels, or label distributions, based on the labels or label distributions of nearby connected examples in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.
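
Soft label propagation can be sketched as iterative neighbour averaging with the labeled nodes held fixed. This is a simplified, unweighted version of the idea written in plain Python, not scikit-learn's API (for real use, `sklearn.semi_supervised` provides `LabelPropagation` and `LabelSpreading`); the graph and iteration count are made up, and every node is assumed to have at least one neighbour.

```python
def propagate_labels(adjacency, seeds, num_classes, iterations=200):
    """Soft label propagation on an unweighted graph.

    adjacency: dict node -> list of neighbour nodes (each list non-empty)
    seeds:     dict node -> class index for the labelled nodes (kept fixed)
    Each unlabelled node repeatedly takes the average label distribution of
    its neighbours, so it ends up with a soft label rather than one class.
    """
    uniform = [1.0 / num_classes] * num_classes
    labels = {}
    for node in adjacency:
        if node in seeds:
            labels[node] = [1.0 if c == seeds[node] else 0.0 for c in range(num_classes)]
        else:
            labels[node] = list(uniform)
    for _ in range(iterations):
        updated = {}
        for node, neighbours in adjacency.items():
            if node in seeds:
                updated[node] = labels[node]  # clamp labelled nodes
            else:
                updated[node] = [
                    sum(labels[n][c] for n in neighbours) / len(neighbours)
                    for c in range(num_classes)
                ]
        labels = updated
    return labels


# A chain 0 - 1 - 2 - 3 - 4 with node 0 labelled class 0 and node 4 class 1.
chain = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
result = propagate_labels(chain, seeds={0: 0, 4: 1}, num_classes=2)
for node in range(5):
    print(node, [round(v, 3) for v in result[node]])
```

The interior nodes converge to [0.75, 0.25], [0.5, 0.5] and [0.25, 0.75]: membership scores that shift gradually along the chain, which a hard propagation scheme (assigning each node a single class) would not express.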
